[6 of 6] NVENC support with Virtual Apps 1808 (formerly XenApp)

Happy New Year to everyone! I thought I would have some time to finish the blog post series during the Christmas holidays, but I didn’t take my 3 little kids into consideration 🙂 So finishing this blog post is now the first thing on my list.

Why is it worth mentioning NVENC support for Virtual Apps at all?

If you followed the blog series you will have seen the benefits of NVENC (NVIDIA Encoder) for VDI. In short:

- Reduced CPU load for encoding (-> better user density)

- Reduced latency for encoding (-> better user experience)

- Better/constant FPS (-> better user experience)

RDSH works differently from VDI: it uses a so-called “offscreen buffer” and cannot leverage NVFBC. Therefore we are not able to use “native” NVENC, which means we couldn’t benefit from the advantages listed above with previous releases of XenApp. For 1808, Citrix implemented a solution that benefits at least partly from our NVENC.

Why partly? As we don’t have “native” NVENC due to the offscreen buffer handling, we don’t really benefit from latency reduction, because the capture data has to be copied between system memory and GPU memory. But we can benefit from reduced CPU load for encoding, and we will also see a better user experience.

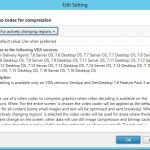
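To get a feel for the copy overhead mentioned above, here is a minimal back-of-the-envelope sketch. It assumes a full-screen, uncompressed frame at 4 bytes per pixel is copied for every encoded frame; the resolution, frame rate and pixel format are my own illustrative assumptions, not values from the Citrix implementation, which may well copy only the changed region or use a different format.

```python
# Rough estimate of how much capture data would cross the
# system-memory <-> GPU-memory boundary per second.
# All inputs are illustrative assumptions, not measured values.

def frame_bytes(width, height, bytes_per_pixel=4):
    """Size of one uncompressed frame in bytes (assumes 4 bytes per pixel)."""
    return width * height * bytes_per_pixel


def copy_rate_mb_per_s(width, height, fps, bytes_per_pixel=4):
    """Data volume copied per second in MB (1 MB = 1,000,000 bytes)."""
    return frame_bytes(width, height, bytes_per_pixel) * fps / 1_000_000


if __name__ == "__main__":
    # Hypothetical session: Full HD at 30 FPS
    print(frame_bytes(1920, 1080), "bytes per frame")
    print(copy_rate_mb_per_s(1920, 1080, 30), "MB/s")
```

Under these assumptions a single Full HD frame is 8,294,400 bytes. Treat the result as an upper bound for one session: with “active changing regions” only part of the screen goes through the video codec.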

Citrix Policy Set

For XenApp workloads it makes no sense to use H.264 as the default. We expect office workloads most of the time in RDS sessions, so the best possible image quality matters, which leads to bitmap remoting. But multimedia content is becoming more popular even for XenApp workloads, and here we can benefit from video codecs like H.264. So this is the perfect use case for the “active changing regions” Adaptive Display explained in the previous blog post.

- Use video codec for compression -> For active changing regions

- Use hardware encoding -> Enabled

- Visual Quality -> High

Impact

To see the impact of NVENC I did a quick test with the familiar 2:30 min video playback in windowed mode, with and without NVENC, and used GPUProfiler and RD Analyzer to compare the results.

As we can see in the screenshots, the main visible difference is the CPU encoder load, which is slightly reduced with NVENC. Total CPU savings during this test were 4-5% CPU for this single user. This is not much, but if we scale up to 30 CCUs there might be a greater impact.

In addition, the test case with NVENC showed a more consistent load. I didn’t notice better FPS in this test with NVENC, but I have already heard from customers that they achieved smoother playback with YouTube videos, for example.
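To illustrate what “scaling up” the 4-5% single-user saving could look like, here is a small sketch. It assumes the saving adds up linearly per session and that only a fraction of users play video at the same time. Both are my assumptions for illustration; I have no measurements behind them, and the 20% active fraction is purely hypothetical.

```python
# Illustrative extrapolation of the single-user CPU saving to many sessions.
# Linear scaling and the active fraction are assumptions, not test results.

def estimated_cpu_saving(per_session_saving_pct, sessions, active_fraction):
    """Aggregate CPU saving in percentage points.

    per_session_saving_pct: saving observed for one video-playing session
    sessions:               concurrent users (CCU) on the VM
    active_fraction:        share of sessions playing video at once (0.0-1.0)
    """
    if not 0.0 <= active_fraction <= 1.0:
        raise ValueError("active_fraction must be between 0.0 and 1.0")
    return per_session_saving_pct * sessions * active_fraction


if __name__ == "__main__":
    # 30 CCUs, hypothetically 20% of them watching video simultaneously
    for saving in (4.0, 5.0):
        total = estimated_cpu_saving(saving, 30, 0.2)
        print(f"{saving}% per session -> about {total:.1f} percentage points")
```

Treat this as a planning aid only. Real multi-user behaviour will depend on encoder session limits, content and scheduling, so it is no substitute for a proper load test.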

Conclusion

The availability of NVENC with Virtual Apps is an interesting option to look at. We won’t get the same advantages that we get on a VDI with NVFBC enabled, but we may save some CPU load and/or improve the user experience, which should be the primary goal. I don’t have reliable load data yet for multiple CCUs on a single VM with NVENC enabled, so you should test carefully before running it in production, but I would give it a try.

Best regards

Simon

Thank you for the informative article series.

Should we enable NVFBC alongside NVENC?

Hi,

NVFBC is required for client OS, but Server OS is different as we only have the “offscreen buffer” there. So the NVENC implementation is different here.

regards

Simon

Hi Simon,

Thank you for the very informative blog series. It cuts right to the point in many areas.

I had a couple of questions for you:

1) Have you done any analysis to understand whether XenDesktop or View has an edge in terms of application-level latency for virtual desktops? We are currently performing an in-depth analysis in our environment, and initial results suggest that View may have an edge in lower-latency, higher-bandwidth scenarios. I suppose this could be due to the tighter coupling to the operating system level you might expect with vSphere as your hypervisor.

2) Are there any other areas where you might expect an outright performance difference between the two? We haven’t covered the higher-latency, lower-bandwidth scenarios yet, and I suspect this might be an area where one is significantly different from the other.

Thanks again for the efforts in compiling this information!

Hi Dustin,

I’ve not tested the latency between XenApp and View in RDSH deployments. I would expect not much difference as long as we compare the same protocol.

How do you test the latency? We use so-called “click to photon” measurements to compare latency.

In general, on RDSH I would concentrate on image quality. Citrix provides many more options in this area compared to Horizon. To get the “necessary” image quality for RDSH users you need to run JPG/PNG fallback, which requires a lot of bandwidth.

regards

Simon

Hi Simon,

Thanks for the quick response – here I am referring to ICA RTT and borrowing from a Citrix KB:

https://support.citrix.com/article/CTX204274

…

6. Server (XA/XD) OS introduced delay (Host Delay)

My question is: for the same hardware and network scenario (large bandwidth = 200 Mbit, fixed/low latency < 35 ms), would you expect the "Host Delay" component to be lower for a View desktop than for a Citrix XenDesktop VDA because of the more native integration between the View Agent and VMware Tools (and the underlying vSphere hypervisor)?

Hopefully that question is clear?

Thanks for your time,

Dustin

And one more follow-up question – which NVIDIA desktop-class display adaptors / GPUs support YUV444 client-side hardware decoding today?

Thanks for your help with this,

Dustin

As far as I know there is no NVDEC support for H264 YUV444. With the Turing architecture we introduced H265 YUV444 decoding, but there is no remoting protocol that supports it on the encoding side. Anyway, I don’t see YUV444 as a valid option for RDSH deployments. Thinwire is much better: it requires no specific endpoint capabilities and less CPU load, yet provides the best possible image quality.

Not that I’m aware of. A lot of customers are running Citrix on vSphere without issues.

Thank you for the very informative blog series. Keep posting! Can I share it?

Why can you benefit from CPU reduction for encoding?

Thanks again for the efforts that went into writing this blog.

I wanted to revisit this question and ask a few further:

– As far as I know there is no NVDEC support for H264 YUV444. Is this still true? What about the NVIDIA P400?

– How can I enable client-side H265 YUV444 decoding?

Hi Dustin,

There is no change in decoding support for H264 YUV444. In general there is no use case for it. See the complete list here:

https://developer.nvidia.com/video-encode-decode-gpu-support-matrix

There is also no support for H265 YUV444 on the Citrix side. Pure hardware support (from the NVIDIA side) doesn’t mean it is also available from the VDI vendor.

Regards

Simon